For the human linguistic concept, see Speech perception.

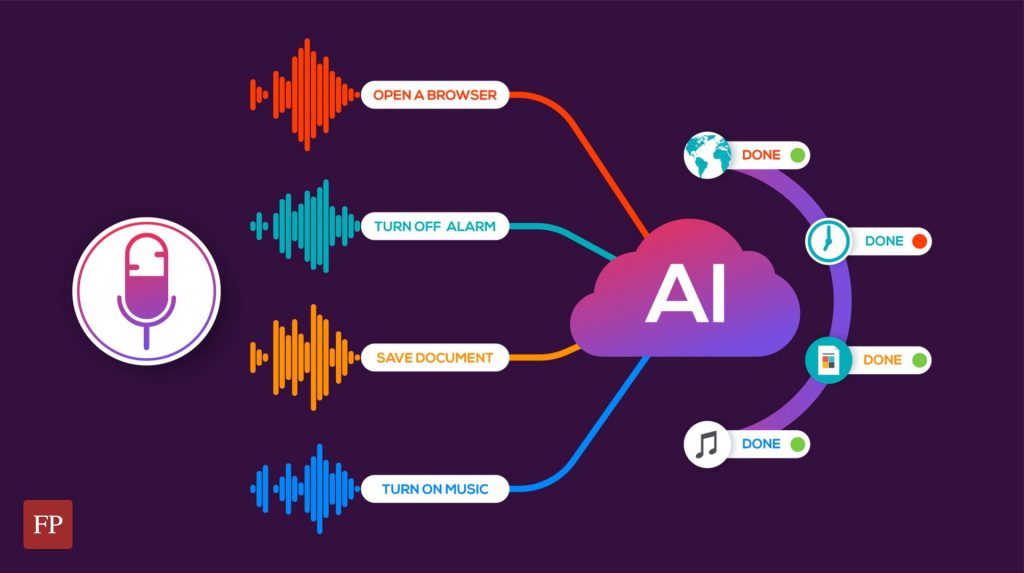

Speech recognition is an interdisciplinary subfield of computer science and computational linguistics that develops methodologies and technologies enabling computers to recognize spoken language and translate it into text. It is also known as automatic speech recognition (ASR), computer speech recognition, or speech-to-text (STT). It draws on knowledge and research from the fields of computer science, linguistics, and computer engineering.

Some speech recognition systems require “training” (also called “enrollment”), in which an individual speaker reads text or isolated vocabulary into the system. The system analyzes the person’s specific voice and uses it to fine-tune the recognition of that person’s speech, resulting in increased accuracy. Systems that do not use training are called “speaker-independent”; systems that use training are called “speaker-dependent”.

Speech recognition applications include voice user interfaces such as voice dialing (e.g., “call home”), call routing (e.g., “I’d like to make a collect call”), home automation device control, keyword search (e.g., finding a podcast where particular words were spoken), simple data entry (e.g., entering a credit card number), preparation of structured documents (e.g., a radiology report), speaker characterization, speech-to-text processing (e.g., word processors or e-mails), and aircraft (usually called direct voice input).

The term voice recognition or speaker identification refers to identifying the speaker rather than what they are saying. Recognizing the speaker can simplify the task of translating speech into text in systems that have been trained on a specific person’s voice, or it can be used to authenticate or verify a speaker’s identity as part of a security process.

From a technological perspective, speech recognition has a long history with several waves of major innovation. More recently, the field has benefited from advances in deep learning and big data. This progress is evidenced not only by the surge of academic papers published in the field but, more importantly, by the worldwide industry adoption of a variety of deep learning methods in designing and deploying speech recognition systems.

History

Key areas of growth were: vocabulary size, speaker independence, and processing speed.

Until 1970

1952 – Three Bell Labs researchers, Stephen Balashek, R. Biddulph, and K. H. Davis, built a system called “Audrey” for single-speaker digit recognition. Their system located the formants in the power spectrum of each utterance.

1960 – Gunnar Fant developed and published the source-filter model of speech production.

1962 – IBM demonstrated its 16-word “Shoebox” speech recognition machine at the 1962 World’s Fair.

1966 – Linear predictive coding (LPC), a speech coding method, was first proposed by Fumitada Itakura of Nagoya University and Shuzo Saito of Nippon Telegraph and Telephone (NTT) while they were working on speech recognition.
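As a rough illustration of what an LPC analysis computes, the sketch below estimates predictor coefficients for one frame of samples using the common textbook autocorrelation method with the Levinson-Durbin recursion. Each sample is modeled as a weighted sum of the previous `order` samples. It is an assumption that this formulation matches Itakura and Saito’s 1966 method in detail, and the function names are hypothetical.

```python
def autocorrelation(frame, max_lag):
    """Biased autocorrelation r[0..max_lag] of one frame of samples."""
    n = len(frame)
    return [sum(frame[t] * frame[t - k] for t in range(k, n)) for k in range(max_lag + 1)]


def levinson_durbin(r, order):
    """Solve for predictor coefficients a[1..order] with x[t] ~ sum_j a[j] * x[t - j].

    Returns (coefficients, residual_prediction_error_energy).
    """
    a = [0.0] * (order + 1)  # a[0] is unused
    energy = r[0]
    for i in range(1, order + 1):
        if energy <= 0.0:
            break  # silent frame or perfectly predicted signal: stop early
        # Reflection coefficient for step i.
        k = (r[i] - sum(a[j] * r[i - j] for j in range(1, i))) / energy
        updated = a[:]
        updated[i] = k
        for j in range(1, i):
            updated[j] = a[j] - k * a[i - j]
        a = updated
        energy *= 1.0 - k * k  # prediction error shrinks as the order grows
    return a[1:], energy


def lpc(frame, order):
    """Hypothetical helper: LPC coefficients for one frame."""
    return levinson_durbin(autocorrelation(frame, order), order)[0]
```

For example, a decaying signal x[t] = 0.9^t is predicted exactly by 0.9·x[t-1], so `lpc([0.9 ** t for t in range(200)], 2)` returns coefficients very close to `[0.9, 0.0]`.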

1969 – Funding at Bell Labs dried up for several years when, in 1969, the influential John Pierce wrote an open letter that criticized and defunded speech recognition research. This defunding lasted until Pierce retired and James L. Flanagan took over.

Raj Reddy was the first person to take on continuous speech recognition, as a graduate student at Stanford University in the late 1960s. Previous systems required users to pause after each word. Reddy’s system used spoken commands to play chess.

Around this time, Soviet researchers invented the dynamic time warping (DTW) algorithm and used it to create a recognizer capable of operating on a 200-word vocabulary. DTW processed speech by dividing it into short frames, e.g. 10 ms segments, and processing each frame as a single unit. Although DTW would be superseded by later algorithms, the technique carried on. Achieving speaker independence remained unsolved at this time.
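To make the frame-by-frame idea concrete, here is a minimal DTW sketch for isolated-word template matching. It assumes each utterance has already been turned into a list of per-frame feature vectors (feature extraction is not shown), uses Euclidean distance between frames, and labels an utterance with the nearest stored template. `dtw_distance` and `recognize` are hypothetical names, not taken from any particular historical system.

```python
import math


def dtw_distance(seq_a, seq_b):
    """Minimum cumulative frame distance over all monotonic alignments of two sequences."""
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    # cost[i][j]: best alignment cost of seq_a[:i] with seq_b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(seq_a[i - 1], seq_b[j - 1])
            # Predecessors: repeat a seq_b frame, repeat a seq_a frame, or advance both.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def recognize(frames, templates):
    """Return the label of the stored template closest to `frames` under DTW."""
    return min(templates, key=lambda label: dtw_distance(frames, templates[label]))


templates = {"one": [(0.0,), (1.0,), (2.0,)], "two": [(5.0,), (5.0,), (0.0,)]}
utterance = [(0.0,), (0.0,), (1.0,), (2.0,), (2.0,)]  # "one", spoken more slowly
```

Here `recognize(utterance, templates)` returns `"one"`: the warping absorbs the slower speaking rate, so the time-stretched utterance aligns with the “one” template at zero cost.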

1970–1990

1971 – DARPA funded five years of Speech Understanding Research, speech recognition research seeking a minimum vocabulary size of 1,000 words. It was thought that speech understanding would be key to making progress in speech recognition, but this later proved untrue. BBN, IBM, Carnegie Mellon, and the Stanford Research Institute all participated in the program. This revived speech recognition research after John Pierce’s letter.

1972 – The IEEE Group on Acoustics, Speech and Signal Processing held a conference in Newton, Massachusetts.

1976 – The first ICASSP was held in Philadelphia; it has since been a major venue for the publication of speech recognition research.

In the late 1960s, Leonard Baum developed the mathematics of Markov chains at the Institute for Defense Analysis. A decade later, at CMU, Raj Reddy’s students James Baker and Janet M. Baker began using the hidden Markov model (HMM) for speech recognition. James Baker had learned about HMMs from a summer job at the Institute for Defense Analysis during his undergraduate education. The use of HMMs allowed researchers to combine different sources of knowledge, such as acoustics, language, and syntax, in a unified probabilistic model.
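Combining knowledge sources in one probabilistic model is often summarized as choosing the word sequence W that maximizes P(O | W) · P(W), where O is the observed audio, P(O | W) comes from an acoustic model and P(W) from a language model. The sketch below assumes both probabilities are already available for a short list of candidate transcriptions. The candidates, their probabilities, and the `lm_weight` scaling factor are made up for illustration.

```python
import math


def decode(candidates, lm_weight=1.0):
    """Pick W maximizing log P(O|W) + lm_weight * log P(W).

    candidates maps a word sequence (tuple of str) to a pair
    (acoustic_prob, lm_prob); all numbers here are hypothetical.
    """
    def score(item):
        _words, (p_acoustic, p_lm) = item
        # Work in log space: products of many small probabilities underflow.
        return math.log(p_acoustic) + lm_weight * math.log(p_lm)

    return max(candidates.items(), key=score)[0]


candidates = {
    ("recognize", "speech"): (0.30, 0.010),
    ("wreck", "a", "nice", "beach"): (0.35, 0.0001),
}
```

With the language model included, `decode(candidates)` picks `("recognize", "speech")` even though its acoustic score is lower. `decode(candidates, lm_weight=0.0)` uses acoustics alone and picks the other candidate.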

By the mid-1980s, Fred Jelinek’s team at IBM had created a voice-activated typewriter called Tangora, which could handle a 20,000-word vocabulary. Jelinek’s statistical approach put less emphasis on emulating the way the human brain processes and understands speech, in favor of statistical modeling techniques such as HMMs. (Jelinek’s group independently discovered the application of HMMs to speech.) This was controversial with linguists, since HMMs are too simplistic to account for many common features of human languages. However, the HMM proved to be a highly useful way of modeling speech and replaced dynamic time warping to become the dominant speech recognition algorithm in the 1980s.
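HMM-based recognizers typically decode with the Viterbi algorithm, which finds the single most likely hidden-state sequence for a sequence of observations. The toy below is a sketch with two hypothetical states (`sil`, `speech`) and discrete observations (`quiet`, `loud`). Real systems use continuous acoustic features and many more states, so all probabilities here are illustrative assumptions. The code works in log probabilities to avoid underflow on long utterances.

```python
import math


def _log(p):
    return math.log(p) if p > 0 else float("-inf")


def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return (most likely state path, its log probability)."""
    # score[t][s]: best log probability of any path ending in state s at time t
    score = [{s: _log(start_p[s]) + _log(emit_p[s][observations[0]]) for s in states}]
    backpointer = [{}]
    for t in range(1, len(observations)):
        score.append({})
        backpointer.append({})
        for s in states:
            best_prev = max(states, key=lambda p: score[t - 1][p] + _log(trans_p[p][s]))
            score[t][s] = (score[t - 1][best_prev] + _log(trans_p[best_prev][s])
                           + _log(emit_p[s][observations[t]]))
            backpointer[t][s] = best_prev
    # Trace back from the best final state.
    last = max(states, key=lambda s: score[-1][s])
    path = [last]
    for t in range(len(observations) - 1, 0, -1):
        path.append(backpointer[t][path[-1]])
    path.reverse()
    return path, score[-1][last]


states = ("sil", "speech")
start_p = {"sil": 0.8, "speech": 0.2}
trans_p = {"sil": {"sil": 0.7, "speech": 0.3}, "speech": {"sil": 0.2, "speech": 0.8}}
emit_p = {"sil": {"quiet": 0.9, "loud": 0.1}, "speech": {"quiet": 0.2, "loud": 0.8}}
path, log_prob = viterbi(["quiet", "loud", "loud", "quiet"], states, start_p, trans_p, emit_p)
```

For this toy model the decoded path is `["sil", "speech", "speech", "sil"]`, with probability 0.01990656.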

1982 – Dragon Systems, founded by James and Janet M. Baker, was one of IBM’s few competitors.

Practical speech recognition

In the 1980s, the n-gram language model was also introduced.

1987 – The back-off model allowed language models to use n-grams of multiple lengths, and CSELT used HMMs to recognize languages (both in software and in specialized hardware processors, e.g. RIPAC).
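The following is a minimal sketch of the back-off idea. A bigram model uses its own (discounted) estimate when a word pair was seen in training. Otherwise it backs off to unigram estimates, rescaled so that each conditional distribution still sums to one. It uses absolute discounting with a hypothetical `discount` of 0.5. This is not the original 1987 formulation, which used a different discounting scheme. Out-of-vocabulary handling is omitted.

```python
from collections import Counter


class BackoffBigramLM:
    """Bigram LM with absolute discounting that backs off to unigram estimates."""

    def __init__(self, sentences, discount=0.5):
        self.discount = discount  # must be < 1 so seen bigrams keep positive mass
        self.unigrams = Counter()  # counts of predicted tokens (includes </s>)
        self.bigrams = Counter()
        for words in sentences:
            tokens = ["<s>"] + list(words) + ["</s>"]
            self.unigrams.update(tokens[1:])
            self.bigrams.update(zip(tokens, tokens[1:]))
        self.total = sum(self.unigrams.values())
        self.history = Counter()  # c(v): how often v is followed by anything
        self.followers = {}       # v -> set of words seen after v
        for (v, w), c in self.bigrams.items():
            self.history[v] += c
            self.followers.setdefault(v, set()).add(w)

    def unigram_prob(self, w):
        return self.unigrams[w] / self.total

    def prob(self, w, prev):
        """P(w | prev)."""
        c_prev = self.history[prev]
        if c_prev == 0:
            return self.unigram_prob(w)  # unseen history: use unigrams directly
        c_pair = self.bigrams[(prev, w)]
        if c_pair > 0:
            return (c_pair - self.discount) / c_prev  # discounted bigram estimate
        seen = self.followers[prev]
        # Mass removed by discounting, spread over unseen successors by unigram weight.
        backoff_mass = self.discount * len(seen) / c_prev
        unseen_unigram_mass = 1.0 - sum(self.unigram_prob(u) for u in seen)
        if unseen_unigram_mass <= 0.0:
            return 0.0
        return backoff_mass * self.unigram_prob(w) / unseen_unigram_mass


lm = BackoffBigramLM([["call", "home"], ["call", "mom"], ["home"]])
```

Trained on these three toy sentences, `lm.prob("home", "call")` is 0.25 from the discounted bigram. `lm.prob("</s>", "call")` is 0.3, obtained by backing off because “call” was never followed by the end of a sentence.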

Much of the progress in the field is owed to the rapidly increasing capabilities of computers. At the end of the DARPA program in 1976, the best computer available to researchers was a PDP-10 with 4 MB of RAM. It could take up to 100 minutes to decode just 30 seconds of speech.
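The real-time factor is processing time divided by audio duration, a common speed measure that is not used in the text above. Taking the quoted worst case at face value, that decoding speed works out as:

$$
\text{RTF} = \frac{\text{processing time}}{\text{audio duration}} = \frac{100 \times 60\ \text{s}}{30\ \text{s}} = \frac{6000\ \text{s}}{30\ \text{s}} = 200
$$

In other words, decoding ran about 200 times slower than real time.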

Two practical products:

1987 – recognizer from Kurzweil Applied Intelligence

1990 – Dragon Dictate, a consumer product.

In 1992, AT&T deployed a voice-recognition call processing service to route telephone calls without the use of a human operator. The technology was developed by Lawrence Rabiner and others at Bell Labs.

By this point, the vocabulary of a typical commercial speech recognition system was larger than the average human vocabulary. Raj Reddy’s former student Xuedong Huang developed the Sphinx-II system at CMU. Sphinx-II was the first system to perform speaker-independent, large-vocabulary, continuous speech recognition, and it had the best performance in DARPA’s 1992 evaluation. Handling continuous speech with a large vocabulary was a major milestone in the history of speech recognition. Huang went on to found a speech recognition group in 1993. Raj Reddy’s student Kai-Fu Lee joined Apple, where, in 1992, he helped develop a speech interface prototype for the Apple computer known as Casper.

Lernout & Hauspie, a Belgian speech recognition company, acquired several other companies, including Kurzweil Applied Intelligence in 1997 and Dragon Systems in 2000. L&H speech technology was used in the Windows XP operating system. L&H was an industry leader until an accounting scandal brought an end to the company in 2001. L&H’s speech technology was bought by ScanSoft, which became Nuance in 2005. Apple originally licensed software from Nuance to provide speech recognition capabilities to its digital assistant Siri.

2000s

In the 2000s, DARPA sponsored two speech recognition programs: Effective Affordable Reusable Speech-to-Text (EARS) in 2002 and Global Autonomous Language Exploitation (GALE). Four teams participated in the EARS program: IBM; a team led by BBN with LIMSI and the University of Pittsburgh; Cambridge University; and a team composed of ICSI, SRI, and the University of Washington. EARS funded the collection of the Switchboard telephone speech corpus, containing 260 hours of recorded conversations from more than 500 speakers. The GALE program focused on Arabic and Mandarin broadcast news speech. Google’s first effort at speech recognition came in 2007, after it hired some researchers from Nuance. The first product was GOOG-411, a telephone-based directory service. The recordings from GOOG-411 produced valuable data that helped Google improve its recognition systems. Google Voice Search is now supported in more than 30 languages.

In the United States, the National Security Agency has used a type of speech recognition for keyword spotting since at least 2006. This technology allows analysts to search through large volumes of recorded conversations and isolate mentions of keywords. Recordings can be indexed, and analysts can run queries over the database to find conversations of interest. Some government research programs have focused on intelligence applications of speech recognition, such as DARPA’s EARS program and IARPA’s Babel program.

In the early 2000s, speech recognition was still dominated by traditional approaches such as hidden Markov models combined with feedforward artificial neural networks. Today, however, many aspects of speech recognition have been taken over by a deep learning method called long short-term memory (LSTM), a recurrent neural network published by Sepp Hochreiter and Jürgen Schmidhuber in 1997. LSTM RNNs avoid the vanishing gradient problem and can learn “very deep learning” tasks that require memories of events that happened thousands of discrete time steps earlier, which is important for speech. Around 2007, LSTMs trained by connectionist temporal classification (CTC) began to outperform traditional speech recognition in certain applications. In 2015, Google’s speech recognition reportedly experienced a dramatic performance jump of 49% through CTC-trained LSTMs, which are now available through Google Voice to all smartphone users.
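CTC lets a network emit one label distribution per frame, including a special blank symbol, without needing a frame-level alignment of the training transcript. The simplest way to turn those outputs into text is best-path (greedy) decoding: take the most likely symbol in each frame, merge consecutive repeats, then drop blanks. The sketch assumes the per-frame probabilities were already produced by some trained model (not shown) and that index 0 is the blank. Production systems usually use beam search instead, often together with a language model.

```python
def ctc_greedy_decode(frame_probs, alphabet, blank=0):
    """Best-path CTC decoding.

    frame_probs: one list of symbol probabilities per frame, indexed like `alphabet`.
    """
    # 1. Most likely symbol index in each frame.
    best_path = [max(range(len(p)), key=p.__getitem__) for p in frame_probs]
    # 2. Merge consecutive repeats, then 3. drop blanks.
    output = []
    previous = None
    for index in best_path:
        if index != previous and index != blank:
            output.append(alphabet[index])
        previous = index
    return "".join(output)


alphabet = ["_", "a", "b"]  # "_" is the blank
frames = [
    [0.1, 0.8, 0.1], [0.2, 0.7, 0.1], [0.9, 0.05, 0.05],
    [0.1, 0.6, 0.3], [0.1, 0.2, 0.7], [0.2, 0.1, 0.7], [0.8, 0.1, 0.1],
]
```

The best path here is `a a _ a b b _`, which decodes to `"aab"`. The blank frame between the two runs of `a` is what allows CTC to output a doubled letter.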

The use of deep feedforward (non-recurrent) networks for acoustic modeling was introduced in 2009 by Geoffrey Hinton and his students at the University of Toronto and by Li Deng and colleagues at Microsoft Research. The work began as a collaboration between Microsoft and the University of Toronto and was later expanded to include IBM and Google (hence the subtitle “The shared views of four research groups” of their 2012 review paper). A Microsoft research executive called this innovation “the most dramatic change in accuracy since 1979”. In contrast to the steady incremental improvements of the preceding decades, the application of deep learning decreased the word error rate by 30%. This innovation was quickly adopted across the industry. Researchers have also begun to use deep learning techniques for language modeling.
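Word error rate, the metric behind figures such as the 30% reduction mentioned above, counts the substitutions, deletions, and insertions needed to turn the recognizer’s output into the reference transcript, divided by the number of reference words. Below is a minimal sketch using word-level Levenshtein distance. It assumes whitespace tokenization and no text normalization (case, punctuation), which real scoring tools usually handle.

```python
def word_error_rate(reference, hypothesis):
    """(substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j]: edit distance between ref[:i] and hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,                 # deletion
                dist[i][j - 1] + 1,                 # insertion
                dist[i - 1][j - 1] + substitution,  # match or substitution
            )
    return dist[len(ref)][len(hyp)] / len(ref)
```

For example, `word_error_rate("call home now", "call phone now")` is 1/3 (one substitution in three reference words). Because insertions count too, WER can exceed 100%.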

Over the long history of speech recognition, both shallow and deep forms (e.g. recurrent nets) of artificial neural networks were explored for many years during the 1980s, the 1990s, and a few years into the 2000s. But these methods never won out over Gaussian mixture model/hidden Markov model (GMM-HMM) technology, with its non-uniform, internally handcrafted structure, based on generative models of speech trained discriminatively. A number of key difficulties were methodologically analyzed in the 1990s, including gradient diminishing and weak temporal correlation structure in the neural predictive models. All these difficulties were in addition to the lack of large training data and large computing power in those early days. Most speech recognition researchers who understood such barriers therefore moved away from neural nets to pursue generative modeling approaches, until the resurgence of deep learning starting around 2009–2010 overcame all these difficulties. Hinton et al. and Deng et al. reviewed part of this recent history, describing how their collaboration with each other and then with colleagues across four groups (University of Toronto, Microsoft, Google, and IBM) ignited a renaissance of applications of deep feedforward neural networks in speech recognition.
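In GMM-HMM systems, each HMM state typically scores an acoustic feature frame with a Gaussian mixture model. The sketch below computes that log-likelihood, assuming diagonal covariances (a common simplification). It uses the log-sum-exp trick for numerical stability. The mixture parameters would normally be trained, e.g. with expectation-maximization, which is not shown, and the function names are hypothetical.

```python
import math


def log_gaussian_diag(x, mean, var):
    """Log density of vector x under a Gaussian with diagonal covariance `var`."""
    return -0.5 * sum(
        math.log(2.0 * math.pi * v) + (xi - m) ** 2 / v
        for xi, m, v in zip(x, mean, var)
    )


def gmm_log_likelihood(x, weights, means, variances):
    """log sum_k w_k * N(x; mean_k, diag(var_k)), computed with log-sum-exp."""
    terms = [
        math.log(w) + log_gaussian_diag(x, m, v)
        for w, m, v in zip(weights, means, variances)
    ]
    top = max(terms)  # subtract the largest term before exponentiating
    return top + math.log(sum(math.exp(t - top) for t in terms))
```

In a full recognizer, this per-state score plays the role of the emission probability inside Viterbi decoding. The deep feedforward networks described above replaced exactly this component.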

2010s

By the early 2010s, speech recognition, also called voice recognition, was clearly differentiated from speaker recognition, and speaker independence was considered a major breakthrough. Until then, systems required a “training” period. A 1987 ad for a doll carried the tagline “Finally, the doll that understands you,” even though the doll was described as one “which children could train to respond to their voice”.

In 2017, Microsoft researchers reached a historic human-parity milestone in transcribing conversational telephone speech on the widely benchmarked Switchboard task. Multiple deep learning models were used to optimize speech recognition accuracy. The reported word error rate was as low as that of 4 professional human transcribers working together on the same benchmark, a test funded by the IBM Watson speech team for the same task.