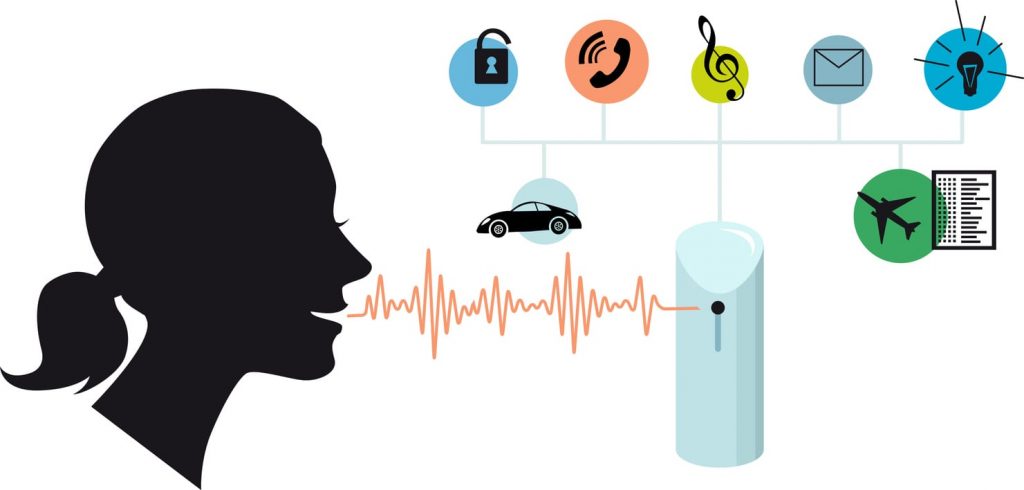

Speech recognition, or speech-to-text (STT), is the process of converting a speech signal into a text stream. It should not be confused with language recognition (language identification), which only answers the question of which language a segment of the speech signal belongs to. Speech recognition is often one of a set of technologies that make it possible to control a computer by voice, enter information by voice, dictate text, and transcribe audio recordings.

Content

- History

- Recognition quality

- Notes

- See also

- Literature

- Links

The first device for recognizing spoken language appeared in 1952; it could recognize digits spoken by a person. In 1964, the IBM Shoebox device was presented at the computer technology fair in New York.

Commercial speech recognition programs appeared in the early 1990s. They are typically used by people who cannot type large amounts of text because of hand injuries. These programs (for example, Dragon NaturallySpeaking and VoiceNavigator) convert the user's speech into text, freeing the hands. The recognition accuracy of such programs is not very high, but it has gradually improved over the years.

The growing computing power of mobile devices has made it possible to create speech recognition programs for them as well. One notable example is Microsoft Voice Command, which lets the user operate many applications by voice, for example to start music playback in a player or to create a new document.

Apple Macintosh computers have a built-in Speech feature in the system settings that can interpret user commands when a certain key is pressed or when the command is preceded by a keyword.

Another interesting program is Speereo Voice Translator (SVT), a voice translator. SVT can recognize phrases spoken in English and speak back a translation in a selected language.

For the Ukrainian language, a speech recognition system has been developed that makes it possible to enter text by voice. It works with a vocabulary of more than 100,000 words. It can be downloaded and used to dictate texts of medium complexity.

Intelligent speech applications that perform automatic speech synthesis and recognition are the next stage in the development of interactive voice response (IVR) systems. Interactive telephone software is no longer a fad but a necessity. Reducing the load on contact center operators and secretaries, cutting labor costs, and increasing the productivity of service systems are just some of the benefits that justify such programs.

Automatic speech recognition and speech synthesis systems are increasingly used in interactive telephone applications. Communication with the voice portal becomes more natural, because a choice can be made not only by keypad (tone dialing) but also by voice command. These recognition systems are speaker-independent, that is, they recognize the voice of any person. The main advantage of voice systems is user-friendliness: the caller no longer has to wade through complex and confusing voice menus. It is enough to state the purpose of the call, and the voice system automatically moves the caller to the desired menu item.
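The routing step described above, from a stated purpose to a menu item, can be illustrated with a minimal sketch. It assumes that a speech recognizer has already turned the caller's utterance into text, and it uses simple keyword matching rather than any particular vendor's method. The menu names, the keyword sets, and the function `route_call` are hypothetical and chosen only for illustration.

```python
import re

# Hypothetical menu items and the keywords that point to them.
MENU_KEYWORDS = {
    "billing": {"bill", "invoice", "payment"},
    "technical_support": {"internet", "connection", "broken", "repair"},
    "operator": {"operator", "agent", "human"},
}


def route_call(transcript: str, default: str = "main_menu") -> str:
    """Pick the menu item whose keywords occur most often in the transcript.

    `transcript` is the text produced by a speech recognizer (assumed here).
    If no keyword matches, the caller is sent to `default`.
    """
    # Lowercase the text and split it into words, ignoring punctuation.
    words = set(re.findall(r"[a-z']+", transcript.lower()))
    best_item, best_hits = default, 0
    for item, keywords in MENU_KEYWORDS.items():
        hits = len(words & keywords)
        if hits > best_hits:
            best_item, best_hits = item, hits
    return best_item


print(route_call("I have a question about my last bill"))
print(route_call("My internet connection is broken"))
```

A production voice portal would typically use a statistical or grammar-based language understanding component instead of fixed keyword sets; the sketch only shows why stating the purpose of the call can replace several levels of menu navigation.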

The next step in speech recognition technology may be the development of so-called silent speech interfaces (SSI). These speech processing systems acquire and process speech signals at an early stage of articulation. This direction is motivated by two significant shortcomings of modern recognition systems: excessive sensitivity to noise and the need for clear, distinct pronunciation when addressing the recognition system. The SSI approach uses new sensors that are not affected by noise as a supplement to the processed acoustic signals.

As of 2016, Microsoft's speech recognition system achieved recognition quality close to that of humans (an error rate of 5.9% versus 5.1%) and can determine the context of speech (sports, computers, etc.). In 2017, IBM achieved an error rate of 5.5%.
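Error percentages of this kind are commonly reported as word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the recognized text into the reference transcript, divided by the number of words in the reference. The following is a minimal sketch of that calculation, assuming WER is the metric meant here; the function name `word_error_rate` and the sample sentences are illustrative and not taken from any of the systems mentioned.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words (classic dynamic programming).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


# One of five reference words is dropped by the recognizer.
print(word_error_rate("turn on the music player", "turn on music player"))
```

Because insertions are counted as errors, WER can exceed 100% when the recognized text is much longer than the reference.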

Davis, K. H., Biddulph, R. and Balashek, S. (1952) Automatic Recognition of Spoken Digits, J. Acoust. Soc. Am. 24(6), pp. 637–642

- Long short-term memory

- Pattern recognition task

- Artificial Intelligence

- Voice control

- OCR

- The McGurk effect

- Silent Speech Interfaces (SSI)

- Voice search

- VoiceXML

- Informativeness of features

T. K. Vintsyuk. Analysis, Recognition and Semantic Interpretation of Speech Signals. Kyiv: Naukova Dumka, 1987.

Methods of Automatic Speech Recognition: in 2 books. Translated from English, edited by W. Lea. Moscow: Mir, 1983. Book 1. 328 pp., ill.

A. V. Frolov, G. V. Frolov. Speech Synthesis and Recognition: Contemporary Solutions.